A guide to localstack (part 3) - Automatic provisioning

10 Feb 2020

Requirements

It’s been a long time since I wrote the two previous articles on localstack. Some things have been fixed in localstack in the meantime, and I updated the previous posts to reflect the changes. If you ended up here without reading them, I strongly advise you to do so and come back to this tutorial later.

To follow it you will need:

- the configuration files from the second part (lambda.zip file included)

- docker and docker-compose installed

Also make sure the docker network localstack-tutorial is present. If it is not, create it with docker network create localstack-tutorial.
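The check-then-create step can be wrapped so that it is safe to run repeatedly. This is only a sketch: ensure_network and the DOCKER_CMD override are names made up for this example, and only docker network inspect and docker network create are actual docker commands.

```shell
# Hypothetical helper: create a docker network only when it is missing.
# DOCKER_CMD is an illustrative override (useful for dry runs), not a docker feature.
ensure_network() {
    network=$1
    docker_cmd=${DOCKER_CMD:-docker}
    # "network inspect" exits with a non-zero status when the network does not exist.
    if $docker_cmd network inspect "$network" >/dev/null 2>&1; then
        echo "network $network already exists"
    else
        $docker_cmd network create "$network" >/dev/null && echo "network $network created"
    fi
}

# Usage: ensure_network localstack-tutorial
```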

You don’t even need to install Terraform or the AWS CLI anymore: we can run everything in docker.

Those in a hurry can get all final files used in this tutorial from this github repository.

Introduction

What do we want to achieve? As seen previously, even if deploying resources efficiently can be solved with Terraform, there are still some negative aspects to localstack:

- Resources are not persisted, so you have to apply the terraform configuration every time localstack restarts.

- Running lambdas for the first time is slow. This is not an issue for asynchronous lambdas. However, for lambda functions that are triggered by http requests and called synchronously, it is a problem: the browser waits for an http response, and the request might time out before the lambda gets bootstrapped and runs for the first time. The idle-container behaviour, which kills lambda containers after 10 minutes of inactivity, does not help either.

To work around these issues I came up with 2 solutions:

- Using docker events, we can provision localstack automatically by running terraform as soon as localstack is ready. As a developer you don’t even have to take care of initializing localstack anymore.

- We will use our own localstack container that prevents lambda containers from being destroyed. (03/03/2020 update: a pull request I opened on the localstack repository was approved, so building a custom image is not needed anymore. More on that later in this post.)

Let’s work on it.

Creating the docker event listener container

What we want is a container that will listen to docker events. As soon as localstack starts, this container will trigger the terraform apply command to provision localstack.

The docker-compose service

In the docker-compose.yml file, add the service docker-events-listener.

services:
  localstack:
    ...
    container_name: localstack # 1
    depends_on:
      - docker-events-listener # 2
  docker-events-listener:
    build:
      context: docker-events-listener-build # 3
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock # 4
      - ./terraform:/opt/terraform/ # 5

1. We set a fixed name for the localstack container. When listening to docker events we need to know - in a predictable manner - which events involve the localstack container. Setting the container name is the easiest way to do this.

2. The docker-events-listener service should be started before localstack (to be sure it can react to its events).

3. We create a folder named docker-events-listener-build. It will be the context of the build, i.e. it will contain all the files required to build the image.

4. The container needs access to the docker socket to listen to events.

5. The container needs access to the terraform resources.

Terraform files

As defined in the docker-compose file, create a folder named terraform, and move the localstack.tf and lambda.zip files into it.

mkdir terraform

mv lambda.zip terraform

mv localstack.tf terraform

The build files

The container will run AWS CLI and Terraform commands, so we need to install them. The AWS CLI can look up its configuration from files.

If not done already, create the docker-events-listener-build folder. Then create the following files inside.

Add an aws_config.txt file with the following content:

[default]

output = json

region = ap-southeast-2

Then an aws_credentials.txt file with the following content:

[default]

aws_secret_access_key = fake

aws_access_key_id = fake

Now the crucial part is the bash script that will be the container’s main process. This script listens to docker events and takes actions accordingly; in this case it runs terraform apply as soon as localstack starts.

Below is the content of the script named listen-docker-events.sh

#!/bin/bash

# Print "<container name> <event>" for every container create/start event.
docker events --filter 'event=create' --filter 'event=start' --filter 'type=container' --format '{{.Actor.Attributes.name}} {{.Status}}' | while read -r event_info
do
    # Split the line on whitespace: the first word is the name, the second the event.
    event_infos=($event_info)
    container_name=${event_infos[0]}
    event=${event_infos[1]}
    echo "$container_name: status = ${event}"

    if [[ $container_name = "localstack" ]] && [[ $event == "start" ]]; then
        sleep 20 # give localstack some time to start
        terraform init
        terraform apply --auto-approve
        echo "The terraform configuration has been applied."
    fi
done

Thanks to the --filter options we only listen to events related to containers being started or created, and we format the output to display only the container name followed by the event name. The rest is pretty self-explanatory.
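To make the parsing step concrete, here is a small sketch of how one formatted line such as "localstack start" can be split and checked without docker running. The function names parse_event and should_provision are invented for this illustration and are not part of the script above.

```shell
# Hypothetical helper: split "<container name> <event>" into its two fields.
parse_event() {
    pe_line=$1
    pe_name=${pe_line%% *}    # everything before the first space
    pe_status=${pe_line#* }   # everything after the first space
    printf 'container=%s event=%s\n' "$pe_name" "$pe_status"
}

# Hypothetical helper: succeed only for the event that should trigger terraform.
should_provision() {
    [ "$1" = "localstack" ] && [ "$2" = "start" ]
}

# Usage:
#   parse_event "localstack start"
#   should_provision localstack start && echo "time to run terraform"
```

Keeping the decision in a separate function makes it possible to try the condition by hand with sample lines before wiring it to the real event stream.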

Finally, here is the content of the Dockerfile to glue everything together.

FROM docker:19.03.5

RUN apk update && \
    apk upgrade && \
    apk add --no-cache bash wget unzip

# Install AWS CLI
RUN echo -e 'http://dl-cdn.alpinelinux.org/alpine/edge/main\nhttp://dl-cdn.alpinelinux.org/alpine/edge/community\nhttp://dl-cdn.alpinelinux.org/alpine/edge/testing' > /etc/apk/repositories && \
    wget "s3.amazonaws.com/aws-cli/awscli-bundle.zip" -O "awscli-bundle.zip" && \
    unzip awscli-bundle.zip && \
    apk add --update groff less python curl && \
    rm /var/cache/apk/* && \
    ./awscli-bundle/install -i /usr/local/aws -b /usr/local/bin/aws && \
    rm awscli-bundle.zip && \
    rm -rf awscli-bundle

COPY aws_credentials.txt /root/.aws/credentials
COPY aws_config.txt /root/.aws/config

# Install terraform
RUN wget https://releases.hashicorp.com/terraform/0.12.20/terraform_0.12.20_linux_amd64.zip \
    && unzip terraform_0.12.20_linux_amd64 \
    && mv terraform /usr/local/bin/terraform \
    && chmod +x /usr/local/bin/terraform

RUN mkdir -p /opt/terraform
WORKDIR /opt/terraform

COPY listen-docker-events.sh /var/listen-docker-events.sh

CMD ["/bin/bash", "/var/listen-docker-events.sh"]

If these 4 files are created in the docker-events-listener-build folder, keep reading.
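If you would rather check than eyeball it, a sketch like the following lists whichever of the four files is missing. The helper name check_build_files is made up for this example; the file names are the ones created above.

```shell
# Hypothetical helper: report which of the four build files are missing.
# $1 is the build folder (defaults to docker-events-listener-build).
check_build_files() {
    cbf_dir=${1:-docker-events-listener-build}
    cbf_missing=0
    for cbf_file in aws_config.txt aws_credentials.txt listen-docker-events.sh Dockerfile; do
        if [ ! -f "$cbf_dir/$cbf_file" ]; then
            echo "missing: $cbf_dir/$cbf_file"
            cbf_missing=1
        fi
    done
    # Exit status 0 when everything is present, 1 otherwise.
    return "$cbf_missing"
}

# Usage: check_build_files && echo "ready to build"
```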

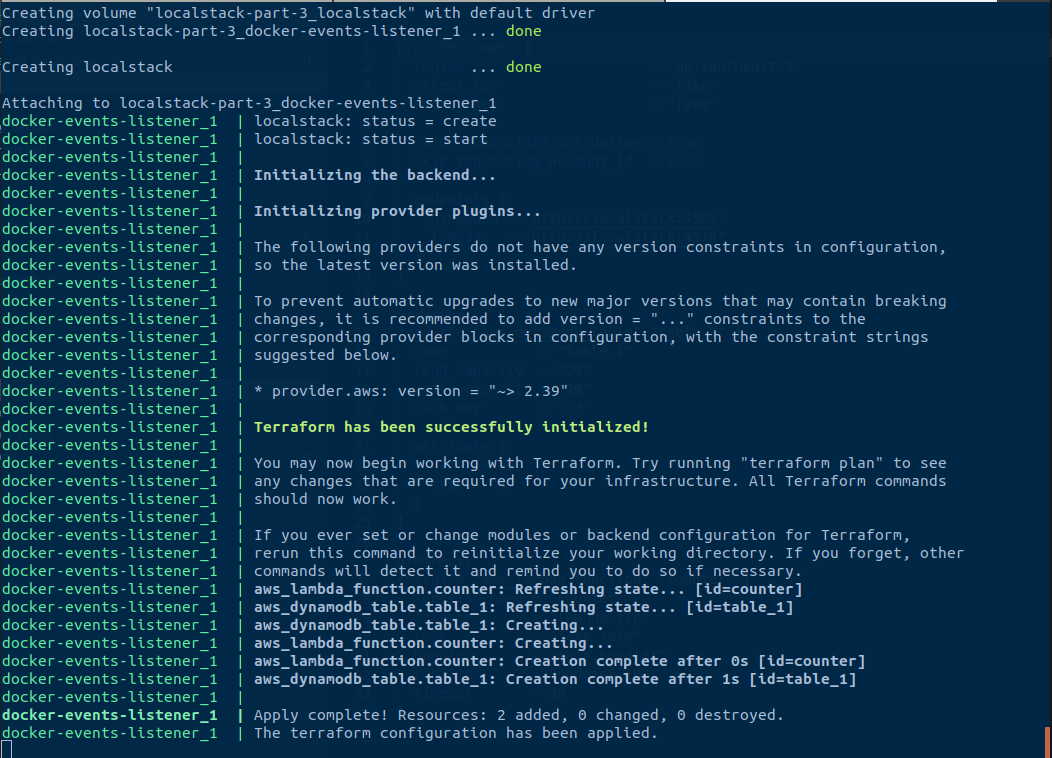

Docker events in action

Let’s build this new image and run the containers. To be sure everything is cleaned up beforehand, you can run docker-compose down -v.

docker-compose build

docker-compose up -d

docker-compose logs -f docker-events-listener

If you didn’t touch the terraform files, you should see the following error after a few seconds:

What’s wrong? If you read the localstack.tf file carefully, you will notice that terraform is configured to reach the dynamodb service on localhost. From inside the docker-events-listener container, localhost is that container itself. Of course dynamodb is not available in the docker-events-listener container. It is accessible in the localstack one.

To fix this, let’s update the localstack.tf file and replace every occurrence of localhost with localstack. Why localstack? Because it matches the docker-compose service name.

As explained in the first tutorial, the docker documentation states that “a container created from a docker-compose service will be both reachable by other containers sharing a network in common, and discoverable by them at a hostname identical to the service name”.

If the value of container_name in the docker-compose configuration had been my_localstack, we could have replaced localhost with my_localstack instead. Docker resolves the docker-compose service name, as well as the container name, to the IP of the container.
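The replacement can be done by hand or with sed. The sketch below assumes the endpoints in localstack.tf are written in the form http://localhost:<port>; if your file uses another form, adjust the pattern. The function name use_compose_hostname is invented for this example.

```shell
# Hypothetical helper: point every http://localhost:<port> endpoint at another host.
# $1 is the terraform file, $2 the hostname to use (e.g. the compose service name).
use_compose_hostname() {
    uch_file=$1
    uch_host=$2
    # Write to a temporary file first, so the original is only replaced on success.
    sed "s#http://localhost:#http://${uch_host}:#g" "$uch_file" > "$uch_file.tmp" \
        && mv "$uch_file.tmp" "$uch_file"
}

# Usage: use_compose_hostname terraform/localstack.tf localstack
```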

Let’s try again once localstack.tf has been edited:

docker-compose down -v

docker-compose build

docker-compose up -d

docker-compose logs -f docker-events-listener

If you get the following error:

You should use an older version of the aws provider for now. In this case add the following block in localstack.tf:

terraform {
  required_providers {
    aws = "~> 2.39.0"
  }
}

After that, terraform should apply just fine!

Now that terraform can be executed within a container, you can even get rid of the port bindings.

ports:
  - 4569:4569 # dynamodb
  - 4574:4574 # lambda

If you want to interact with these services using terraform or the awscli, you can use the docker exec command. Give these a try:

docker exec -it localstack-part-3_docker-events-listener_1 aws lambda invoke --function-name counter --endpoint-url=http://localstack:4574 --payload '{"id": "test"}' output.txt

docker exec -it localstack-part-3_docker-events-listener_1 aws dynamodb scan --endpoint-url http://localstack:4569 --table-name table_1
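The container name used above depends on the name of the folder the project lives in, so it may differ on your machine. One possible alternative is to go through docker-compose exec, which addresses the container by its service name. The wrapper below is only a sketch: listener_exec and the COMPOSE_CMD override are names invented for this example.

```shell
# Hypothetical wrapper: run a command inside the docker-events-listener service.
# COMPOSE_CMD is an illustrative override; it defaults to docker-compose.
listener_exec() {
    # -T disables pseudo-TTY allocation, which helps when the output is piped.
    ${COMPOSE_CMD:-docker-compose} exec -T docker-events-listener "$@"
}

# Usage (run from the folder that contains docker-compose.yml):
#   listener_exec aws dynamodb scan --endpoint-url http://localstack:4569 --table-name table_1
```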

Creating the lambda containers on start

What I’m going to describe is something very specific to my setup and you might not need it at all.

In my development environment, lambda functions are triggered by http requests and called synchronously. The first time a lambda is called, localstack spins up its container. This is a pretty slow process, and by the time the lambda container is ready the http request has timed out. Thanks to the LAMBDA_EXECUTOR environment variable set to docker-reuse, localstack keeps the container alive for a while (10 minutes), so subsequent calls are handled much faster.

I chose to leverage docker events once again to improve my development environment. Thanks to environment variables, we can configure the behaviour of the docker-events-listener container directly in docker-compose.

For once, let’s start with the code; explanations will follow.

The docker-compose.yml file updated:

services:
  localstack:
    ...
  docker-events-listener:
    ...
    environment:
      APPLY_TERRAFORM_ON_START: "true"
      INVOKE_LAMBDAS_ON_START: Lambda1 Lambda2 Lambda3

And the listen-docker-events.sh script

#!/bin/bash

docker events --filter 'event=create' --filter 'event=start' --filter 'type=container' --format '{{.Actor.Attributes.name}} {{.Status}}' | while read -r event_info
do
    event_infos=($event_info)
    container_name=${event_infos[0]}
    event=${event_infos[1]}
    echo "$container_name: status = ${event}"

    if [[ $APPLY_TERRAFORM_ON_START == "true" ]] && [[ $container_name = "localstack" ]] && [[ $event == "start" ]]; then
        terraform init
        terraform apply --auto-approve
        echo "The terraform configuration has been applied."

        if [[ -n $INVOKE_LAMBDAS_ON_START ]]; then
            echo "Invoking the lambda functions specified in the INVOKE_LAMBDAS_ON_START env variable"
            # Split the variable on spaces and invoke each lambda in the background.
            while IFS=' ' read -ra lambdas; do
                for lambda in "${lambdas[@]}"; do
                    echo "Invoking ${lambda}"
                    aws lambda invoke --function-name "${lambda}" --endpoint-url=http://localstack:4574 output.txt &
                done
            done <<< "$INVOKE_LAMBDAS_ON_START"
        fi
    fi
done

- The first addition is the APPLY_TERRAFORM_ON_START environment variable. This is very useful whenever you wish to turn off automatic provisioning and apply the terraform configuration yourself.

- The second addition concerns the INVOKE_LAMBDAS_ON_START variable. For every word separated by a space, a lambda gets invoked (whose name is the word).
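To see what the splitting does without starting any container, the following sketch turns a space-separated value into one name per line. It uses tr rather than the read -ra construct of the script, purely so that it also runs in a plain sh; the function name each_lambda is made up for this example.

```shell
# Hypothetical helper: print one lambda name per line from a space-separated list.
each_lambda() {
    # tr -s squeezes repeated spaces into a single newline; sed drops empty lines
    # left behind by leading spaces or an empty value.
    printf '%s\n' "$1" | tr -s ' ' '\n' | sed '/^$/d'
}

# Usage:
#   each_lambda "Lambda1 Lambda2 Lambda3" | while read -r lambda; do
#       echo "Invoking ${lambda}"
#   done
```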

Updating the docker-compose configuration to work with the counter lambda we have used since the beginning of these tutorials, here is what we get:

services:
  localstack:
    ...
  docker-events-listener:
    ...
    environment:
      APPLY_TERRAFORM_ON_START: "true"
      INVOKE_LAMBDAS_ON_START: counter

Let’s start from scratch one more time:

docker-compose down -v

docker-compose build

docker-compose up -d

Wait a few seconds (maybe a few minutes), then run docker ps: you should have a lambda container in the list!
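Rather than re-running docker ps by hand, the waiting can be scripted with a small polling loop. This is a generic sketch: wait_until is a name invented for this example, and the usage line assumes the lambda container name contains the word lambda, which you should verify against your own docker ps output.

```shell
# Hypothetical helper: retry a command until it succeeds or a timeout is reached.
# $1 = timeout in seconds, $2 = pause between attempts in seconds, rest = command.
wait_until() {
    wu_timeout=$1
    wu_interval=$2
    shift 2
    wu_waited=0
    until "$@"; do
        if [ "$wu_waited" -ge "$wu_timeout" ]; then
            return 1   # gave up: the command never succeeded in time
        fi
        sleep "$wu_interval"
        wu_waited=$((wu_waited + wu_interval))
    done
    return 0
}

# Usage (assumes the container name contains "lambda"):
#   wait_until 300 5 sh -c "docker ps --format '{{.Names}}' | grep -q lambda"
```

The same helper could stand in for a fixed sleep wherever a script has to wait for another container to become ready.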

💡 Using docker labels might be a better approach to solve this and could result in a cleaner bash script

Preventing lambda containers from being destroyed

Even if we automated the creation of the lambda containers, what is the point if they get destroyed after 10 minutes? In my humble opinion, being able to configure the localstack behaviour on this subject would be the best solution. I opened a github issue on this matter and will update this post if it gets resolved.

03/03/2020 Update - I recently submitted a pull request which will be available in localstack version 0.10.8. You will just need to set the environment variable LAMBDA_REMOVE_CONTAINERS to false to prevent lambda containers from being destroyed.

Have a look at the final solution on github to see how every piece fits together!

Conclusion

Thanks to docker events, it’s possible to achieve many things that would seem impossible otherwise. At the same time, the configuration becomes very complex. Automatic provisioning hides the complexity of what’s happening within containers. When everything works fine this is awesome. But as soon as something goes wrong it takes time to investigate and fix issues, not to mention that it requires a good understanding of docker internals. With this in mind, you will have to decide for yourself whether you want to follow this path!

If you found this tutorial helpful, star this repo as a thank you! ⭐