A guide to localstack (part 2) - Deploying resources with Terraform

25 Aug 2019

Introduction

This article was updated in early 2020 for localstack 0.10.7 and terraform 0.12.20.

In the previous post I explained the capabilities of localstack, but there are still a few challenges. In this article I will explain how we can use Terraform to simplify and speed up the deployment of AWS resources in the localstack environment. I will assume you already have the configuration files from the first part, as this tutorial follows it directly. Also make sure the docker network localstack-tutorial is present.

You can get all the files used in this tutorial from this GitHub repository.

Setup

Install Terraform

You can download the proper package for your operating system and architecture from this page.

For instance, to install terraform 0.12.20 on Ubuntu Bionic, follow these steps:

wget https://releases.hashicorp.com/terraform/0.12.20/terraform_0.12.20_linux_amd64.zip

unzip terraform_0.12.20_linux_amd64.zip

sudo mv terraform /usr/local/bin/terraform

sudo chmod +x /usr/local/bin/terraform

rm terraform_0.12.20_linux_amd64.zip

Run terraform --version to be sure you can use the terraform executable.

Terraform configuration

The first thing you’ll need is a terraform configuration file. Create a localstack.tf file:

touch localstack.tf

Just like we would do to deploy resources in AWS, we will use the aws provider. Add the following content to the file:

provider "aws" {

region = "ap-southeast-2"

access_key = "fake"

secret_key = "fake"

endpoints {

dynamodb = "http://localhost:4569"

lambda = "http://localhost:4574"

}

}

There are a few things that differ in comparison to a “normal” configuration of the AWS provider: the custom endpoints and the keys. The keys are required by the provider but are useless in our localstack context so you can set the values to any string you want.

Now run terraform init to download the plugin for the aws provider.

DynamoDB

Time to deploy a first resource. Of course, you will need localstack running. Start the container with:

docker-compose up -d

Add the following content to the localstack.tf file.

resource "aws_dynamodb_table" "table_1" {

name = "table_1"

read_capacity = 20

write_capacity = 20

hash_key = "id"

attribute {

name = "id"

type = "S"

}

}

The above configuration is the terraform equivalent to running the aws dynamodb create-table command as in the previous tutorial.

If you would like to make sure the table is absent from localstack, run this command.

aws dynamodb delete-table --endpoint-url http://localhost:4569 --table-name table_1

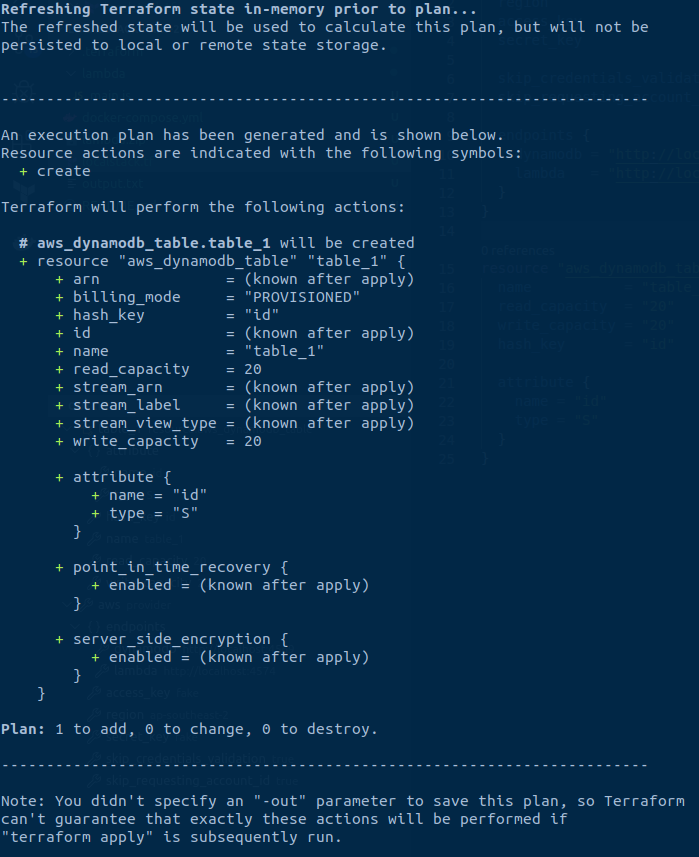

Now run terraform plan

Does the command fail with an error about the credentials?

Terraform is trying to validate the fake credentials, but we don’t need them to be valid. Add the skip_credentials_validation = true line to the provider configuration to skip this check.

Run terraform plan again.

Boom! A new error, this time about the AWS account ID:

The AWS account ID is yet another AWS concept which is not implemented in localstack. This time you will need to add the skip_requesting_account_id = true line to the provider configuration to prevent this.
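
With both flags in place, the provider block should look roughly like the sketch below. It only combines the settings already shown in this article; nothing else is assumed.

```hcl
provider "aws" {
  region     = "ap-southeast-2"
  access_key = "fake"
  secret_key = "fake"

  # localstack cannot validate credentials or return an account ID,
  # so ask the provider to skip both checks
  skip_credentials_validation = true
  skip_requesting_account_id  = true

  endpoints {
    dynamodb = "http://localhost:4569"
    lambda   = "http://localhost:4574"
  }
}
```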

Run terraform plan one more time and you should finally get a proper plan.

You can now run terraform apply, type yes and that should be it!

Feel free to use the awscli to check if the table is present by running the following command:

aws dynamodb list-tables --endpoint-url http://localhost:4569

Running terraform plan once more will give you an empty plan just as expected.
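
If you would rather confirm the result from Terraform itself, output blocks are one option. This is a minimal sketch: the output names table_1_name and table_1_arn are hypothetical and chosen for this example, while name and arn are attributes exported by the aws_dynamodb_table resource.

```hcl
# Hypothetical output names, added only for illustration
output "table_1_name" {
  value = aws_dynamodb_table.table_1.name
}

output "table_1_arn" {
  value = aws_dynamodb_table.table_1.arn
}
```

After the next terraform apply, running terraform output table_1_arn should print the ARN reported by localstack.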

Lambda

Here is the configuration to append to the localstack.tf file to deploy the counter lambda function.

resource "aws_lambda_function" "counter" {

function_name = "counter"

filename = "lambda.zip"

role = "fake_role"

handler = "main.handler"

runtime = "nodejs8.10"

timeout = 30

}

Before deploying this resource, let’s make sure it doesn’t exist anymore (in case you just created it from the previous post).

aws lambda delete-function --function-name counter --endpoint-url http://localhost:4574

Run terraform plan followed by terraform apply.
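
As written, the resource does not notice when the content of lambda.zip changes. A possible variation, which is not part of the original setup, is to add the source_code_hash argument so that Terraform compares a hash of the archive on each plan. Treat this as a sketch: it assumes localstack reports the code hash the same way AWS does, which may not hold for every version, and if it does not, the plan may show a change on every run.

```hcl
resource "aws_lambda_function" "counter" {
  function_name = "counter"
  filename      = "lambda.zip"

  # Optional addition: triggers an update when the archive content changes
  source_code_hash = filebase64sha256("lambda.zip")

  role    = "fake_role"
  handler = "main.handler"
  runtime = "nodejs8.10"
  timeout = 30
}
```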

Let’s see if everything is working as expected. As the lambda function has not been triggered yet we should not find any item in the table:

aws dynamodb scan --endpoint-url http://localhost:4569 --table-name table_1

Execute the lambda and scan the table once more to see the changes.

aws lambda invoke --function-name counter --endpoint-url=http://localhost:4574 --payload '{"id": "test"}' output.txt

aws dynamodb scan --endpoint-url http://localhost:4569 --table-name table_1

I hope everything is running smoothly so far! Run terraform plan again to see the empty plan as it should be.

Adding other services

Adding new services should be very easy now. Let’s deploy a simple resource: an SNS topic.

The first thing to do is to update the docker-compose.yml file in order to start the SNS service in localstack:

- Map port 4575 of the container to the same port on your host.

- Add sns to the value of the SERVICES environment variable (do not forget the comma delimiter between services).

Then run docker-compose up -d to apply these changes.

Append the new resource in the localstack.tf file:

resource "aws_sns_topic" "my_topic" {

name = "TOPIC_NAME"

}

Update the provider block as well by adding the SNS endpoint to the endpoints block: sns = "http://localhost:4575"

Then run terraform apply.

You can use the awscli this time as well to check if the topic is present:

aws sns list-topics --endpoint-url http://localhost:4575

Edge cases

Terraform is not a miracle solution and you will have to cater for a few edge cases. Some resources might not get created by Terraform just as you would expect. If this happens, here is a workaround.

Instead of creating a resource the “right way” such as:

resource "aws_sns_topic" "my_topic" {

name = "TOPIC_NAME"

}

You can achieve pretty much the same thing by declaring a null_resource and calling the awscli from it. Replace the aws_sns_topic block with this one:

resource "null_resource" "my_sns_topic" {

provisioner "local-exec" {

command = "aws sns --endpoint-url=http://localhost:4575 create-topic --name TOPIC_NAME"

}

triggers = {

# uuid() returns a new value on every run, so the topic is always (re)created

version = uuid()

}

}

Run terraform init to download the plugin for the “null” provider, then run terraform apply. This way, you don’t have to use the awscli for some resources and terraform for others.
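
Because uuid() changes on every run, the command above is executed on each terraform apply. If you would rather run it only when the topic name changes, one variation is to key the trigger on the name instead. This is a sketch, not the setup used in this article: the local value topic_name is a hypothetical name introduced for the example.

```hcl
locals {
  # Hypothetical local value holding the topic name used in this article
  topic_name = "TOPIC_NAME"
}

resource "null_resource" "my_sns_topic" {
  triggers = {
    # Re-run the provisioner only when the topic name changes
    topic_name = local.topic_name
  }

  provisioner "local-exec" {
    command = "aws sns --endpoint-url=http://localhost:4575 create-topic --name ${local.topic_name}"
  }
}
```

The trade-off is that Terraform will not recreate the topic after a localstack restart, since nothing in the trigger has changed. In that case you can force it with terraform taint null_resource.my_sns_topic before the next apply, or simply keep the uuid() trigger.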

Wrapping up

Your terraform configuration is now ready! This makes deploying the resources much easier. Just run terraform apply --auto-approve as soon as localstack is ready.

In localstack some resources are persisted on disk but some are not. You will notice after stopping and restarting localstack that the dynamodb table will be present immediately but you will still need to re-deploy the lambda function and the SNS topic.

Be careful when you stop the container: the Terraform state remains unchanged even if some resources are gone from localstack (if you did not know already, terraform created a terraform.tfstate file containing the current state). This mismatch between the state and what actually exists could mess with your deployments. A mechanism to delete the resources that cannot be persisted when localstack stops would be handy.

Read the next post to see how we can go even further and automate the deployment of resources as soon as localstack is ready!

If you found this tutorial helpful, star this repo as a thank you! ⭐